In recent years, multimodal interfaces supporting a variety of input techniques have emerged. In general, these techniques go beyond traditional keyboard and mouse interaction (WIMP) by offering speech, pen, touch, gaze, or gesture input. To give users a more natural way of interacting, we have begun investigating combinations of input techniques, specifically the combination of touch and pen input.

We believe that combining touch and pen input is beneficial, because people expect to interact quite differently with their fingers than with a pen. We therefore implemented ideas and concepts for combining these two input modalities efficiently.

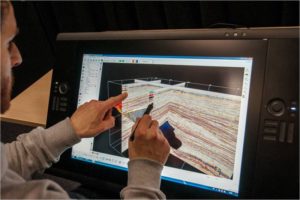

The Pen and Touch application for Seismic Interpretation extends the open-source seismic interpretation framework OpendTect with the ability to handle pen and touch input simultaneously. It adds not only true multi-touch navigation to OpendTect's 3D viewer but also simultaneous pen-and-touch combinations that simplify common tasks such as slice manipulation and horizon tracing.

The Pen and Touch application for Seismic Interpretation runs on the Windows operating system and relies on the following software and hardware frameworks:

The Pen and Touch application for Seismic Interpretation is available to members of the VRGeo Consortium.

Related Publication

- Ömer Genç: Novel Pen & Touch based Interaction Techniques for Seismic Interpretation, Master of Science Thesis, University of Applied Sciences Düsseldorf, Department of Media, July 2013