Information technology is crucial for enhancing recovery from existing fields, exploring new hydrocarbon sources in challenging operational frontiers, and reducing the environmental footprint.

The VRGeo Consortium is a consortium of the international oil and gas industry. Its main goal is to develop and evaluate interactive hardware and software technologies for visualization and interpretation systems in the oil and gas industry.

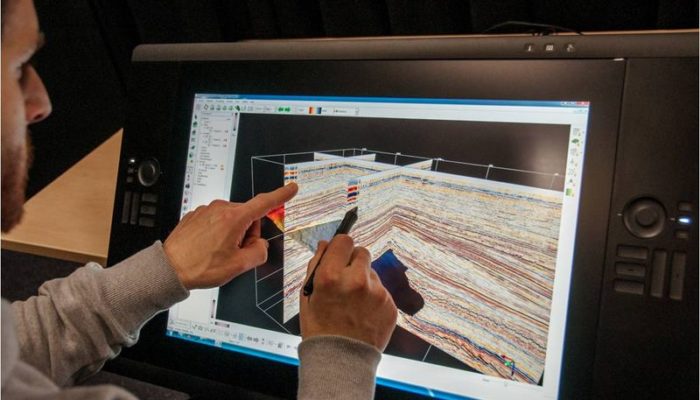

The work of the VRGeo Consortium addresses integrated visualization and interpretation workflows in oil and gas, from personal to presentation environments, spanning the continuum from tablet PCs to desktops to small collaboration scenarios to large-scale solutions.

Major challenges and objectives are:

- Big Geoscience Data Visualization and Analytics

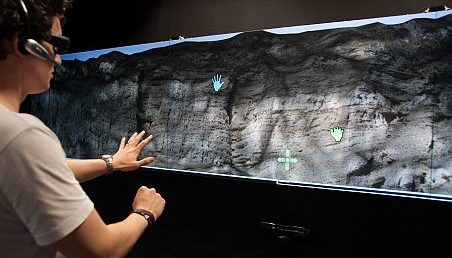

- Novel Human-Computer Interaction (HCI) Paradigms and Technologies

- Small- and Large-Scale Collaborative Environments

The VRGeo R&D Agenda, as defined by the VRGeo Consortium Steering Committee, encompasses the following activities and projects:

- Deep Learning using Convolutional Neural Networks (CNNs) on Large Geoscience Data

- Large Geo Data Visualization

- Natural 3D Human-Computer Interaction (HCI)

- Collaborative Environments and Workflows

- Technology Watch and Innovation Scouting

- Performance Comparisons, Benchmarking, and User Studies

For oil and gas companies, the VRGeo Consortium offers an opportunity to share experiences, ideas, challenges, and resources for basic research.